In previous parts I looked at strategy, the RFP and site hosting, design, the CMS, and audio.

This part will cover news.

As mentioned in a previous part, web news staff were part of the newsroom, reporting to the Head of News. Our choice of an integrated model with a core of web-focussed staff worked very effectively, particularly when planning events like election coverage or coverage of the release of the government budget. It also ensured continued alignment with editorial standards and culture.

While some other broadcasters had initially set up separate interactive departments—the BBC being the most notable in the early 2000s—there seemed to be a lot of duplication in this model, and high costs. It also robbed existing staff of the chance to learn new skills. The BBC moved to a unified newsroom in 2008.

RNZ’s distributed and unified model was replaced with a separate digital structure in 2015, and the news team was rolled back into the newsroom in 2023.

News

In the 2005 iteration of the site, the published news was mostly radio copy that had been lightly edited.

At that time, the contracts with international wire services placed clear limits on RNZ’s use of their content: it was restricted to live and time-bound use, with no archiving. Also, the budget did not stretch to providing a comprehensive and modern news service. However, it was possible to use domestically generated content.

After some discussion, the scheme we arrived at was to publish ‘bulletins’ of content to the site. Each bulletin was a collection of stories, in order of editorial importance.

These bulletins were prepared alongside the text bulletins that were compiled and supplied to RNZ’s commercial news clients. This work was done by a commercial news sub-editor, whose role was to assemble and check these bulletins.

This made good use of existing operations and re-used existing radio content. In these early days, web news copy was largely a replication of what was used on air.

Each new published bulletin would replace the previous one on the website, with only the latest batch of stories being visible in the news section. Older bulletins were purged after about a week, with any links to the content remaining active for that time.
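To make the bulletin model concrete, here is a minimal Python sketch. It is not RNZ’s code: `Bulletin` and `BulletinStore` are hypothetical names, stories are reduced to headline strings, and the seven-day `RETENTION` value is an assumption standing in for “about a week”. Because a story’s address depends on the bulletin it appeared in, the same story repeated in two bulletins ends up reachable at two different addresses.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed retention period; the text only says "about a week".
RETENTION = timedelta(days=7)


@dataclass
class Bulletin:
    bulletin_id: int
    published_at: datetime
    stories: list[str]  # headlines, in order of editorial importance


class BulletinStore:
    """Hypothetical store: only the latest bulletin is shown, older ones stay addressable until purged."""

    def __init__(self) -> None:
        self._bulletins: dict[int, Bulletin] = {}
        self._latest: int | None = None

    def publish(self, bulletin: Bulletin) -> None:
        # A new bulletin replaces the previous one in the news section.
        self._bulletins[bulletin.bulletin_id] = bulletin
        self._latest = bulletin.bulletin_id

    def news_section(self) -> list[str]:
        if self._latest is None:
            return []
        return self._bulletins[self._latest].stories

    def resolve(self, bulletin_id: int, position: int) -> str | None:
        # A story's address is (bulletin, position), so a story repeated in
        # several bulletins has several different addresses.
        bulletin = self._bulletins.get(bulletin_id)
        if bulletin is None or not 0 <= position < len(bulletin.stories):
            return None
        return bulletin.stories[position]

    def purge(self, now: datetime) -> None:
        # Drop bulletins older than the retention period, but never the latest one.
        cutoff = now - RETENTION
        expired = [
            bid for bid, b in self._bulletins.items()
            if b.published_at < cutoff and bid != self._latest
        ]
        for bid in expired:
            del self._bulletins[bid]
```

After a purge, links into an expired bulletin stop resolving, which is the behaviour the permanent-URL work later removed.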

This wasn’t ideal, because a story that appeared in several bulletins ended up as several duplicate copies, each with a different URL. The duplicate URL issue was resolved in 2006.

With the introduction of a new CMS in 2008, and with the contracts sorted out, it was possible to have a single unique URL with a headline for every story, regardless of how many times it was updated, and for stories to remain forever.

The number of staff working on web content had also increased, and the focus was on longer, more comprehensive stories, still based on RNZ’s original reporting. Reporters were encouraged to provide web-length versions of their stories, and in some cases web-only material was planned for events like coverage of the government’s budget release. Stories were updated throughout the day as they evolved, and could be given a new headline with each update. Links to an old headline would redirect to the updated story automatically.
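One common way to get a permanent URL that survives headline changes is to make the story ID the stable part of the URL and treat the headline slug as decoration. The Python sketch below assumes that approach; the `/news/{id}/{slug}` scheme, `StoryRouter` and `slugify` are hypothetical, and this is not necessarily how RNZ’s CMS did it. A request carrying an outdated slug gets a 301 (permanent) redirect to the current canonical URL.

```python
from __future__ import annotations

import re

# Hypothetical URL scheme: /news/<numeric id>/<headline slug>
_STORY_PATH = re.compile(r"/news/(\d+)/([a-z0-9-]*)")


def slugify(headline: str) -> str:
    # "Storm hits Wellington, power cut" -> "storm-hits-wellington-power-cut"
    return re.sub(r"[^a-z0-9]+", "-", headline.lower()).strip("-")


class StoryRouter:
    """Hypothetical router: the numeric ID is permanent, the slug follows the latest headline."""

    def __init__(self) -> None:
        self._headlines: dict[int, str] = {}

    def publish(self, story_id: int, headline: str) -> None:
        # Creating or updating a story keeps the same ID; only the headline changes.
        self._headlines[story_id] = headline

    def canonical_url(self, story_id: int) -> str:
        return f"/news/{story_id}/{slugify(self._headlines[story_id])}"

    def route(self, path: str) -> tuple[int, str | None]:
        """Return (HTTP status, canonical URL or None)."""
        match = _STORY_PATH.fullmatch(path)
        if match is None:
            return 404, None
        story_id = int(match.group(1))
        if story_id not in self._headlines:
            return 404, None
        canonical = self.canonical_url(story_id)
        # Any outdated slug (an old headline) redirects permanently.
        return (200, canonical) if path == canonical else (301, canonical)
```

Because the ID alone identifies the story, every headline a story has ever had redirects correctly without keeping a table of past slugs.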

My team and I worked closely with news staff on planning event coverage, and adding features to the CMS to support their story-telling needs.

The most valuable asset any website has is its archive of past content. When I left in 2016, most of the traffic on any given day was to content not published ‘today’. Most of this was content from the previous few weeks, mostly driven by external links, search engines and related story links.

I have already talked about SEO being used to raise RNZ’s profile in Google search and Google News. A major upgrade to the technical SEO was done in 2014. Permanent URLs are obviously important for search, but also for links from other sites. Link rot is something large publishers must try to avoid.

Another important feature was search. The RNZ site search indexes all text on all assets in the system, and presents the results of a search as a single set. A search can return a mix of news, audio (only the associated text), and programme episodes. It also includes any tags that match the results.

The key design criteria were speed, accuracy and scalability. All searches are fast, even with nearly a million assets. The design was based on an internal search engine I wrote for the RNZ intranet, called BRAD. I wrote about an early version of that tool for Linux Journal in 2005.
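The core idea of indexing all text on every asset type and returning one mixed result set can be sketched with a simple inverted index. This is an illustration only, not BRAD or the RNZ site search: `Asset`, `SearchIndex` and the three kind names are assumptions, query terms are combined with AND, and there is no relevance ranking (results are simply sorted by ID).

```python
from __future__ import annotations

import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    asset_id: str
    kind: str  # assumed kinds: "news", "audio", "episode"
    text: str  # for audio, only the associated text is indexed
    tags: tuple[str, ...] = ()


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class SearchIndex:
    """Hypothetical inverted index over every asset type at once."""

    def __init__(self) -> None:
        self._postings: defaultdict[str, set[str]] = defaultdict(set)
        self._assets: dict[str, Asset] = {}

    def add(self, asset: Asset) -> None:
        self._assets[asset.asset_id] = asset
        for token in set(tokenize(asset.text)):
            self._postings[token].add(asset.asset_id)

    def search(self, query: str) -> tuple[list[Asset], list[str]]:
        """Return one mixed result list plus the tags attached to those results."""
        tokens = tokenize(query)
        if not tokens:
            return [], []
        # An asset matches only if it contains every query term (AND semantics).
        matching_ids = set.intersection(*(self._postings.get(t, set()) for t in tokens))
        results = sorted((self._assets[i] for i in matching_ids), key=lambda a: a.asset_id)
        tags = sorted({tag for asset in results for tag in asset.tags})
        return results, tags
```

Lookups only touch the posting sets for the query terms, which is why this style of index stays fast as the number of assets grows.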

News Publishing

For news, speed is the most important feature of any editing and publishing system. RNZ uses a tool called iNews to create and edit news copy, and for a range of other broadcast-related tasks. It is very fast, easy to learn, and tightly integrated into the news operation. There are hundreds of macros, designed by staff over many years, that dramatically speed up many operations at the touch of a key.

The browser-based editing and workflow tools available to us in 2005 were vastly inferior to this desktop tool, so I made the decision to adapt iNews to handle publishing to the site. There was one problem, though: the only way to get content out of iNews was via file transfer protocol (FTP), one story at a time.

We built an application that received these stories, processed them, and updated the CMS via an API. This application was able to create, update, and delete stories based on the formatting of stories created in iNews. This small application ran continuously without errors from 2005 to 2016, and I suspect it is still in use today.
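As an illustration of this kind of ingest step, the Python sketch below parses one transferred story file and turns it into a create, update or delete call. The file format is entirely hypothetical (a block of `Key: value` header lines such as `ID`, `Action` and `Headline`, then a blank line, then the body) and does not reflect the actual iNews export format. `InMemoryCMS` is a stand-in for a real CMS API client.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ParsedStory:
    action: str
    story_id: str
    headline: str
    body: str


def parse_story(raw: str) -> ParsedStory:
    """Parse a hypothetical story file: 'Key: value' header lines, a blank line, then the body."""
    raw = raw.replace("\r\n", "\n")  # normalise Windows line endings
    header_text, _, body = raw.partition("\n\n")
    headers: dict[str, str] = {}
    for line in header_text.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            headers[key.strip().lower()] = value.strip()
    return ParsedStory(
        action=headers.get("action", "update").lower(),
        story_id=headers["id"],  # a KeyError here means the file has no ID header
        headline=headers.get("headline", ""),
        body=body.strip(),
    )


class InMemoryCMS:
    """Stand-in for a real CMS API client."""

    def __init__(self) -> None:
        self.stories: dict[str, dict[str, str]] = {}

    def upsert(self, story_id: str, headline: str, body: str) -> None:
        self.stories[story_id] = {"headline": headline, "body": body}

    def delete(self, story_id: str) -> None:
        self.stories.pop(story_id, None)


def process(raw: str, cms: InMemoryCMS) -> str:
    """Apply one incoming story file to the CMS and return the action taken."""
    story = parse_story(raw)
    if story.action == "delete":
        cms.delete(story.story_id)
    elif story.action in ("create", "update"):
        cms.upsert(story.story_id, story.headline, story.body)
    else:
        raise ValueError(f"unknown action: {story.action!r}")
    return story.action
```

Keeping each file self-describing means the receiver needs no state about what was sent before, which suits a transport that delivers one story at a time.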

In MySource Matrix, the time to live for a story in the top five was up to five minutes, while other stories took up to 20 minutes to update. These times were dictated by cache times and performance trade-offs inside the CMS software.

This was too slow for our needs, so we worked closely with our sysadmin to build some intelligence into the cache expiration that was more closely aligned with our publishing cycle.
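One way to align cache expiry with a publishing cycle is to invalidate exactly the pages a publish affects, at the moment of publishing, rather than waiting for a fixed time to live. The Python sketch below assumes that approach; `PageCache`, `on_publish` and the URL paths are hypothetical, and it does not describe how MySource Matrix or our sysadmin’s changes actually worked. The category names reuse the ‘top’ and ‘nat’ categories used by the news team.

```python
from __future__ import annotations


class PageCache:
    """Hypothetical page cache keyed by URL path."""

    def __init__(self) -> None:
        self._pages: dict[str, str] = {}

    def get(self, path: str) -> str | None:
        return self._pages.get(path)

    def put(self, path: str, html: str) -> None:
        self._pages[path] = html

    def invalidate(self, paths: set[str]) -> None:
        for path in paths:
            self._pages.pop(path, None)


def paths_affected_by(story_id: int, categories: list[str]) -> set[str]:
    # The story page itself, each category listing it appears in,
    # and the home page if the story is in the 'top' category.
    paths = {f"/news/{story_id}"}
    paths.update(f"/news/{category}" for category in categories)
    if "top" in categories:
        paths.add("/")
    return paths


def on_publish(cache: PageCache, story_id: int, categories: list[str]) -> set[str]:
    """Expire only the affected pages, immediately, instead of waiting for a TTL."""
    affected = paths_affected_by(story_id, categories)
    cache.invalidate(affected)
    return affected
```

Unaffected pages keep their cached copies, so the cost of a publish stays proportional to what actually changed.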

When we migrated to Rails, I enhanced this way of working, created a more powerful API, and rewrote the publishing engine in Ruby. The engine was extended and enhanced up until the time I left.

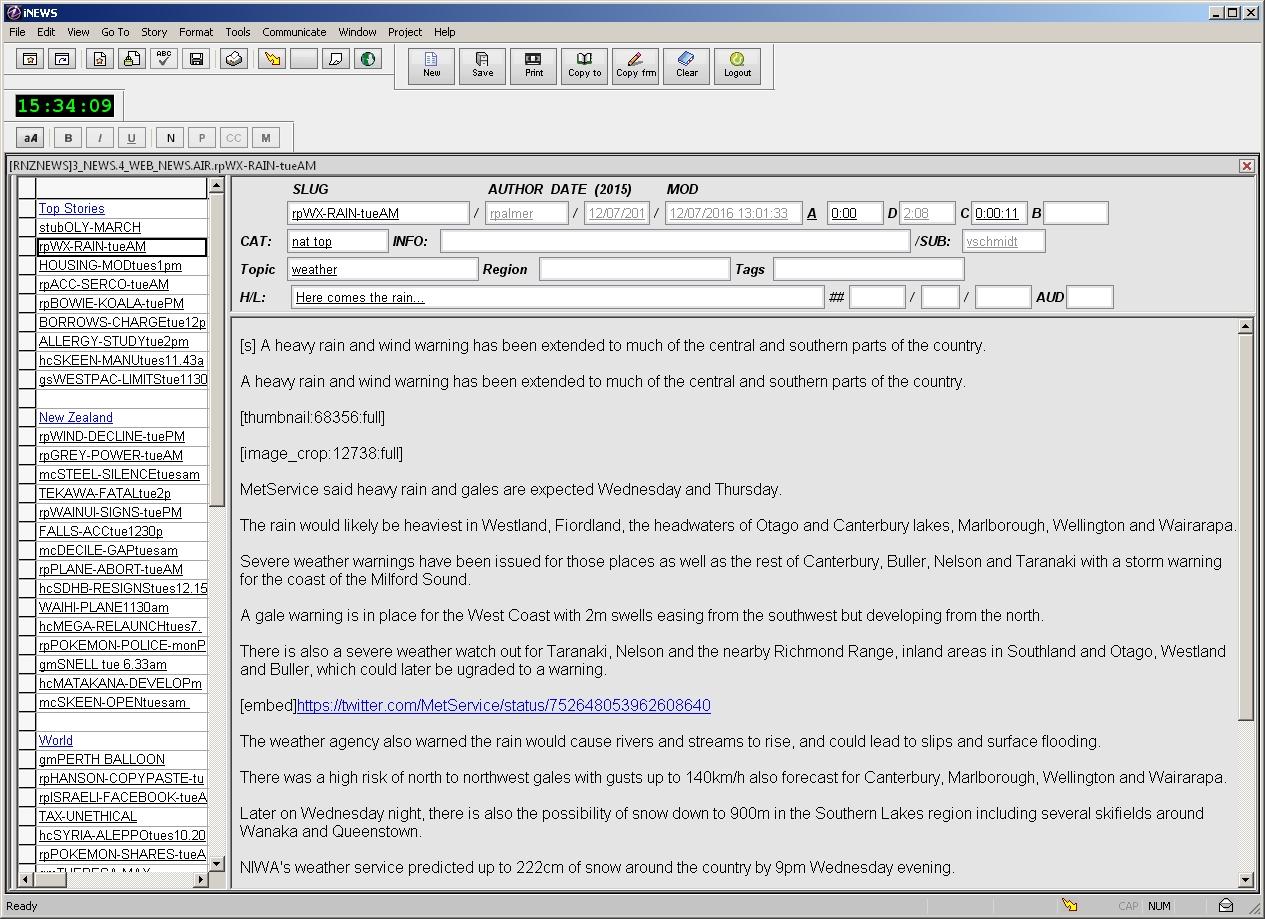

To edit and publish, news staff order stories in a queue and, at the press of one key, publish either all stories (in the order specified) or just one story (updating only its content). They can assign categories and tags, and can also remove stories if needed. You can see in this screenshot that the story is in the ‘top’ and ‘nat’ (National) categories, and has ‘weather’ as its topic tag.

At first glance this seemed an odd way of doing things, and it looks clunky, but once people got used to it they soon found out how fast it was. iNews is a native Windows desktop application and is very fast to use. Any number of new stories could be added and the order of stories changed, and these changes were then published together in one bulk operation. It took about 30 seconds to update 60 stories in what was called a ‘full publish’.
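The difference between a full publish and a single-story publish can be sketched in Python as follows. In this hypothetical model, which is not the actual publishing engine, a full publish pushes every queued story and rebuilds each category’s ordering from queue position, while a single publish replaces one story’s content and leaves the ordering alone. `QueuedStory`, `Site` and both function names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class QueuedStory:
    story_id: str
    headline: str
    body: str
    categories: list[str] = field(default_factory=list)
    tags: list[str] = field(default_factory=list)


@dataclass
class Site:
    stories: dict[str, QueuedStory] = field(default_factory=dict)
    order: dict[str, list[str]] = field(default_factory=dict)  # category -> story IDs


def full_publish(queue: list[QueuedStory], site: Site) -> None:
    """Push every queued story and rebuild each category's order from queue position."""
    new_order: dict[str, list[str]] = {}
    for story in queue:
        site.stories[story.story_id] = story
        for category in story.categories:
            new_order.setdefault(category, []).append(story.story_id)
    # Stories dropped from the queue leave the listings but keep their pages.
    site.order = new_order


def single_publish(story: QueuedStory, site: Site) -> None:
    """Replace one story's content; the existing ordering is left untouched."""
    site.stories[story.story_id] = story
```

Separating content from ordering is what lets a single-story update stay cheap while a reorder is done in one bulk pass.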

Updating a single story from iNews took about five seconds to pass through the system and be live on the site. This was great for fast-changing news, as editors were able to update a running story as frequently as they wanted with no penalty.

I did have plans to move the creation and editing of news directly into ELF. The critical part of this project was creating a workflow that felt natural for editors and that ran fast enough in the browser to keep up with the pace of writing and copy-editing. I did quite a lot of planning towards this over several years, and it was very tempting to post the designs for this from memory as part of this series!

The big problem to solve can be summed up in two words: browser lag. Creating a good workflow for editors would require a single page application (SPA) of some sort, and this would need to be optimised for speed. Many of these SPA editors look good and have all the features, but often cannot keep up with a fast touch-typist. The React-based editor I am using right now to type this post cannot even keep up with me!

This lag is a major usability issue for writers and editors working at speed, and needed to be solved for the solution to be viable. The other big requirement was customised keystrokes for common operations, or sequences of operations, and some sort of integration with the iNews system. By 2016 the focus was on other things, and we also did not have the resources to tackle it.

For what it is worth, this could be done using a modern front-end technology like Svelte and keeping things lean. Svelte has superior speed, reactivity (which is critical for a smooth user experience), size, and ease of development compared with React. The added bonus is that Svelte is quickly becoming the preferred framework for creating interactives for story-telling in many news organisations, so this could be leveraged to make content for the public.

In the final part I will cover growing the audience and leading transformation, and provide a summary of takeaways.
